CAVEON SECURITY INSIGHTS BLOG


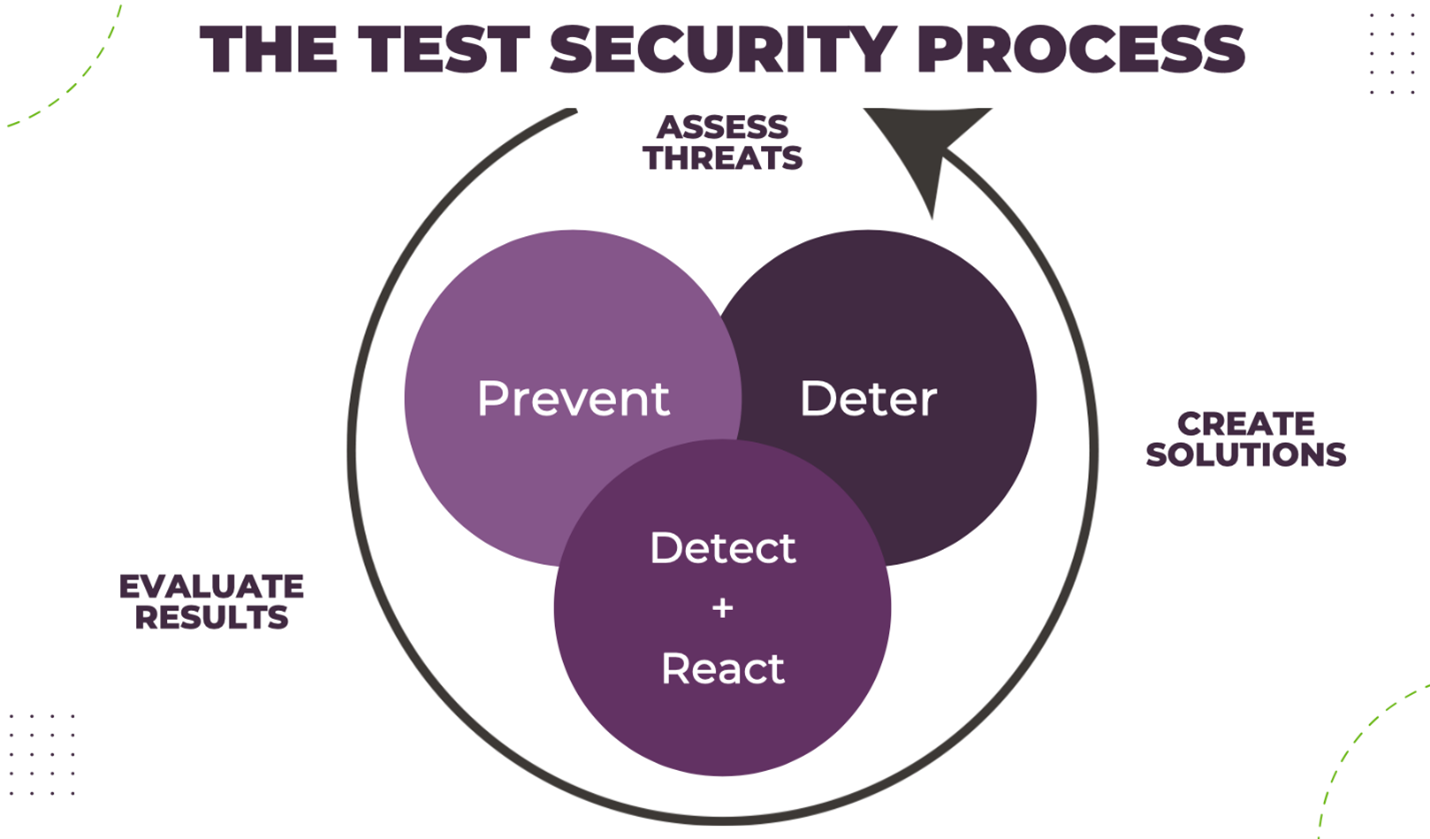

Statewide Assessments: Prevent, Deter, Detect + React to Test Fraud in K-12 Exams

Posted by John Fremer

updated over a week ago

Introduction

It is undeniable that the landscape of statewide assessment has changed in the past several years. From the growing importance of consortia and the increasing recognition of the unfair impact of testwiseness on test scores to the COVID-19 pandemic necessitating a move to remote learning and test administration, SEAs and LEAs have faced a host of new challenges. These changes have likewise affected how we ensure test results are valid and reliable. Test security is more important than ever before, and it is necessary to evaluate just what has changed and how we should adapt.

However, before diving into just what is new regarding test security of statewide accountability assessments, it is critical to acknowledge that many of the core tenets of test security remain the same, and many of the guiding principles continue to be as relevant as ever. Despite the changes of the past decade, these principles should remain the foundation of our security efforts. Let’s look at each in turn:

- Prevention

- Deterrence

- Detection

- Reaction (Investigations and Follow-Up)

Prevention

As Benjamin Franklin proclaimed so insightfully, “An ounce of prevention is worth a pound of cure.” Or, in the case of K-12 summative testing, a pound of detection and reaction. To the best of their abilities, SEAs and their vendors should implement policies and technologies that help prevent test fraud.

Prevention is defined as actions a program can take to actually stop or reduce the risk of test fraud before it occurs. (An added benefit—while not its primary purpose, preventative solutions can have a significant deterrent effect as well when the scope and effectiveness of those solutions are communicated broadly.) Preventative measures are versatile and can (and should) be implemented at every stage of the assessment process.

The following recommendations help prevent test fraud.

- Important best-practice test security guidelines regarding prevention are available to SEAs and LEAs, and each program should implement them as far as is practical. These best practices are found in the TILSA Test Security Guidebook: Preventing, Detecting, and Investigating Test Security Irregularities (2013) and the revised edition entitled TILSA Test Security: Lessons Learned by State Assessment Programs in Preventing, Detecting, and Investigating Test Security Irregularities (2015). Some of the outlined recommendations include:

  - The need for over-arching, inter-department testing handbooks spelling out test security practices and policies

  - Comprehensive security training should be undertaken by all involved in the testing process, both at the outset of their job and periodically thereafter

  - Ensuring that every role involved in, or affecting, test security is well documented

  - The need to complete risk analyses that evaluate threats, vulnerabilities, and the level of loss they may inflict

- Cooperation at the school, district, and state levels, as well as with trusted vendors, results in the strongest test security processes.

- Consortia remain an important factor in state assessments. More and more LEAs and SEAs leverage test content from consortia and/or other vendors. Every entity that utilizes another’s items has a responsibility to help protect that content.

- Take the time to consider, plan, and document test security incident responses BEFORE a breach occurs. Test Security Incident Response Plans (SIRPs) should be drafted, reviewed, approved, and ready to be invoked at a moment’s notice.

- Technology-Based Testing (TBT) continues to offer more opportunities to enhance test security than are being used, just as other technology innovations present new threats. Item and test designs that enhance test security and prevent breaches should be implemented. At the item level, these include Technology-enhanced items; performance items; Discrete Option Multiple Choice items™; and SmartItems™. At the test design level, these innovations include multiple forms; Linear on the Fly tests (LOFT); Computer Adaptive Testing (CAT); and other techniques to minimize item exposure.

- Scale up test development, because larger item banks yield a host of benefits for programs. More secure test designs, the ability to rapidly replace compromised items, and rapid publishing (and re-publishing) of multiple forms can all be realized when enough items exist. Automated Item Generation (AIG) offers SEAs an efficient, inexpensive means of ramping up item pools.

- Student oaths, which many SEAs and LEAs require students to sign as part of the testing experience (particularly at the point of test administration), and educator agreements should be as clear and explicit as they can be, taking into account the age and language proficiency of the test takers. Clearly communicating what is and is not permitted, and detailing the sanctions for non-compliance, is proven to have a powerful impact on prevention and deterrence alike.

- Change is constant, and test security is an ongoing, continual process. Test security policies, procedures, and materials need to evolve as circumstances change. Methods of prevention should be instituted throughout the testing process, should be re-evaluated frequently, and new methods continually sourced and implemented.
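To make the test-design recommendation above more concrete, here is a minimal Python sketch of Linear on the Fly (LOFT) form assembly. It is an illustration under stated assumptions, not a description of any vendor's implementation: the item bank layout (item ID mapped to a content domain), the `blueprint` dictionary, and the function name `assemble_loft_form` are all hypothetical. Each test taker receives a form drawn at random from the bank while still meeting the same blueprint, which limits how often any single item is exposed.

```python
import random


def assemble_loft_form(item_bank, blueprint, seed):
    """Draw one test form at random from the bank.

    item_bank: dict mapping item ID -> content domain (hypothetical layout).
    blueprint: dict mapping content domain -> number of items required.
    seed:      per-test-taker seed, so a form can be reproduced for audits.
    """
    rng = random.Random(seed)
    form = []
    for domain, count in blueprint.items():
        # All items in the bank that belong to this content domain.
        pool = [item_id for item_id, d in item_bank.items() if d == domain]
        if len(pool) < count:
            raise ValueError(f"not enough items in domain {domain!r}")
        # Sample without replacement so no item repeats within a form.
        form.extend(rng.sample(pool, count))
    # Shuffle so items are not grouped by domain in presentation order.
    rng.shuffle(form)
    return form


if __name__ == "__main__":
    # Hypothetical bank: 10 math items and 10 reading items.
    bank = {f"M{i}": "math" for i in range(10)}
    bank.update({f"R{i}": "reading" for i in range(10)})
    print(assemble_loft_form(bank, {"math": 3, "reading": 2}, seed=101))
    print(assemble_loft_form(bank, {"math": 3, "reading": 2}, seed=102))
```

An operational system would also balance item difficulty and track exposure rates. The sketch shows only the random draw, and why a larger item bank makes more distinct forms possible.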

Deterrence

Deterrence as a security principle has not traditionally been discussed with regard to test security practices in statewide assessment. While some aspects of deterrence have been discussed with preventative test security strategies, it is helpful to separate the two and address deterrence as its own security category.

Think of deterrence as a communication plan meant to discourage and inhibit individuals from cheating or committing other forms of test fraud. Remember that deterrence measures are only effective if they are made known, which means they should be announced and published.

A good analogy for thinking about deterrence is the surveillance cameras used in retail stores. Through signage, businesses communicate their security measures that can catch shoplifters in the act, deterring would-be thieves from even attempting to steal merchandise. The same is true of communicating test security policies; by communicating the consequences of being caught cheating, you can deter individuals from ever attempting to commit fraud in the first place.

Along with prevention, deterrence offers the greatest return with regard to test security. One further notable outcome of deterrence is that it lets students, proctors, and administrators know what is and is not acceptable. The majority of examinees have a sincere desire to take their exams honestly. Having clear guidelines and communicating what is allowed before, during, and after the exam can help honest test takers avoid any “gray” areas when preparing to take their test, providing assistance, or providing information about the test that shouldn’t be shared.

Deterrence typically involves undertaking a series of steps starting with establishing clear rules and ending with publicizing incidents when those rules have been broken and consequences enforced. These steps are:

- Establish clear policies and rules regarding what constitutes unauthorized behavior before, during, and after testing.

- Communicate clearly the rules and expectations to test takers and all others involved in testing. This should occur prior to testing as well as at the start of testing. Remind frequently, as repetition is essential.

- Spell out the consequences for not following the rules.

- Employ all necessary methods to detect if those rules have been violated.

- Follow up thoroughly on any test security incidents.

- Publicize actions taken against those who violated the test security rules and policies (while respecting privacy laws and regulations). This final step is critical.

Examples of deterrence include (but are not limited to):

- Communicating to students that:

  - No cell phones, other devices, or unauthorized materials are allowed in the testing room.

  - Social media platforms and chat rooms are being monitored before, during, and after testing windows.

  - Action is always taken when the rules are not strictly followed.

- Communicating to administrators, proctors, and teachers that:

  - Testing materials must be securely stored and accounted for, and checks and balances are in place to ensure they are.

  - Unauthorized access to secure test content and/or soliciting, receiving, distributing, or using secure test content is in violation of the contract, and action is always taken when those rules are not strictly followed.

  - Assisting test takers, pre-filling answers, or manipulating assessment results are in violation of the rules, and measures are in place that enable you to find those occurrences.

As stated above, these communications should be delivered numerous times and through a variety of channels, including in person, via email, during training, on posted signage, and as part of the test administration instructions.

Detection & Reaction (Investigation & Follow Up)

Detection solutions are designed to detect a test security incident while it is occurring or shortly thereafter. Once an incident is detected, it should be followed with an immediate, specific, and almost automatic pre-determined set of responses.

While the two categories of detection and reaction are often discussed separately, it’s vital to mesh detection and reaction strategies into one smooth and seamless process. Those “automatic” responses have been strategically pre-planned and formulated to stop that specific type of breach from harming your test scores, and they have been custom-developed to quickly and efficiently mitigate any damages that have already occurred.

Please note that while we refer to the follow-up step as “reaction”, it is often called “investigation.” An investigation is one type of reaction an SEA or LEA could undertake in the event of a security incident.

As early as 2013, test security best practices outlined the necessity of checking the adequacy of test security methods on a continuing basis. The two Guidebooks created by TILSA comprehensively covered certain methods of detection, specifically data forensics, and were a recognition of the need to share ideas and information across states and their vendors. There was considerable variation across states as to what issues were addressed and by whom. Some states relied primarily on vendors with or without extensive test security requirements. Others had requests for proposals requiring test security actions and also carried out their own activities, such as regular testing observation programs.

Policies and procedures surrounding the use of detection methods have advanced in the ensuing years. This is true of data forensics used to determine the validity of test scores, and of other detection methods such as patrolling the web for test content and monitoring test administrations to confirm that test security rules and regulations are being correctly implemented and followed.

The following recommendations concern detecting and reacting to (investigating) test fraud:

- The need for education in carrying out detection efforts is high, and it is increasingly recognized in the guidance on detection and education methods offered by public and private entities. A few examples include:

  - The US Department of Education Peer Review Guidelines

  - “Assessment Literacy” efforts by states, some of which are very extensive

  - Increase in test security focus at state-level meetings and conferences, such as the National Conference on Student Assessment (NCSA)

  - Articles, white papers, and blogs by private entities

- Data forensics is still recognized as necessary to ensuring the validity of test results. While erasure analysis used to be the primary form of data forensics that was conducted on a regular basis, more sophisticated and effective data forensics methods are now available.

  - Similarity analyses are recognized as most effective, particularly when combined with other approaches

- The use of web monitoring, while once used only rarely and typically only when a breach was known or suspected, is becoming increasingly widespread. Web monitoring should be used to detect, react to, and minimize the damage from item-disclosure breaches.

  - Trade associations and entities including the Council of Chief State School Officers (CCSSO), National Council on Measurement in Education (NCME), US Department of Education (ED), Association of Test Publishers (ATP), and the International Test Commission (ITC) all prescribe web patrolling as a test security best practice.

- In compliance with US Department of Education Peer Review Guidance, states should undertake test administration monitoring to determine that policies and procedures are implemented and followed correctly, the training of administrators and proctors is effective, and the rules are being followed.

  - Real-time monitoring that allows for a quick response is preferable

- It is always better to use a variety of detection methods rather than relying on a single approach. Data forensics should be combined with web patrol and test administration monitoring whenever possible and should be employed on an ongoing basis.

  - Interpretations of test security results are made with extreme care and caution by states

- When it comes to responding to a test security incident, the need for well-defined procedures and trained staff continues to be recognized. As noted previously, the time to develop and vet these procedures is BEFORE an incident occurs.

  - States need to have their own comprehensive Security Incident Response Plans (SIRPs) as guides for staff

  - Recognition of the need to have a single spokesman as well as defined “lessons” from each test security incident

  - The “Handbook of Test Security,” TILSA guidebooks, and other trade association documents are available and provide guidance to states and districts in following up on test security incidents

- Investigations continue to be judged as the most effective form of reaction to a security incident

  - When a breach is suspected (or detected), an on-the-ground investigation is usually required to determine the “truth” around the incident.

  - Investigations should be undertaken in accordance with established standards.

  - Increased recognition of the need for experienced help with investigations

- Public interest in test security breaches and investigations continues to be high, and detected breaches and the steps taken in response continue to be reported in a variety of media outlets.

  - Public interest can continue for long periods, e.g. Atlanta Public Schools, college admissions testing, etc.

- It is essential to follow up on the results of investigations—SEAs and LEAs sweep issues “under the rug” at their own peril.
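The similarity analyses mentioned in the list above can be illustrated with a deliberately simplified Python sketch. It counts, for each pair of test takers, the number of items on which both chose the same incorrect answer, and flags pairs at or above a threshold. Operational data forensics relies on statistical models of how likely such agreement is by chance. The raw count, the `threshold` value, and the function names here are assumptions made purely for illustration.

```python
from itertools import combinations


def identical_incorrect_count(resp_a, resp_b, key):
    """Count items where both test takers chose the same wrong answer."""
    return sum(
        1
        for a, b, correct in zip(resp_a, resp_b, key)
        if a == b and a != correct
    )


def flag_similar_pairs(responses, key, threshold):
    """Return (id_a, id_b, count) for pairs at or above the threshold.

    responses: dict mapping test-taker ID -> string of chosen options.
    key:       string of correct options, one character per item.
    threshold: hypothetical cut-off; real programs derive flags from
               chance-agreement probabilities, not from a raw count.
    """
    flagged = []
    for (id_a, resp_a), (id_b, resp_b) in combinations(sorted(responses.items()), 2):
        count = identical_incorrect_count(resp_a, resp_b, key)
        if count >= threshold:
            flagged.append((id_a, id_b, count))
    return flagged


if __name__ == "__main__":
    # Made-up answer key and responses for a 10-item test.
    answer_key = "ABCDABCDAB"
    sample_responses = {
        "s1": "ACCDBBCAAD",
        "s2": "ACCDBBCAAB",
        "s3": "ABCDABCDAC",
    }
    print(flag_similar_pairs(sample_responses, answer_key, threshold=3))
```

A flagged pair is a reason to look more closely, for example by combining the result with web monitoring or test administration observations. It is not evidence of wrongdoing on its own.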

